
What is web scraping in Python? Web scraping is a technique for extracting useful information from websites, and it is a practical way to create your own dataset. In this article I will show you how to build a dataset by web scraping with Python. We have already seen how the two Python modules urllib and requests can help you write a web scraper from scratch. There are many ways to run a web scraper: in the previous article we used Selenium, then we combined Selenium with Beautiful Soup, and now we use the requests module instead of Selenium alongside Beautiful Soup. Requests is a valuable library to add to your data science toolkit.


Python provides several modules, such as urllib and requests, for downloading files from the web. Here I use the requests library to download files from their URLs efficiently.

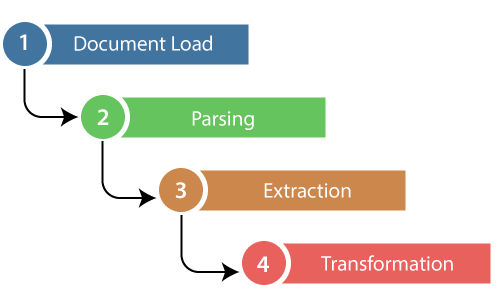

Let's look at the step-by-step procedure for downloading a file from a URL with the requests library:

1. Import the module.

2. Get the link (URL).

3. Save the content under a file name.


Here we save the downloaded file as facebook.ico.

Example
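Here is a minimal sketch of the three steps using requests. The function name `download_file`, the timeout, and the `raise_for_status()` check are our own additions and do not come from the original example.

```python
import requests


def download_file(url: str, filename: str, timeout: float = 10.0) -> int:
    """Download `url` and write the raw response body to `filename`.

    Returns the number of bytes written. (Hypothetical helper.)
    """
    # Step 2: request the URL, following redirects the way a browser would
    response = requests.get(url, allow_redirects=True, timeout=timeout)
    # Stop on 4xx/5xx responses instead of silently saving an error page
    response.raise_for_status()
    # Step 3: write in binary mode, because the file may be an image, video, etc.
    with open(filename, "wb") as f:
        return f.write(response.content)
```

For example, `download_file("https://www.facebook.com/favicon.ico", "facebook.ico")` would save the icon in the current working directory. That URL is an assumption about where the icon is hosted.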

Result

We can see that the file (the icon) has been downloaded into our current working directory.

But we may need to download different kinds of files from the web, such as images, text, or video. So let's first find out what type of data the URL points to:

However, there is a smarter way: fetch only the headers of a URL before actually downloading it. This lets us skip files that weren't meant to be downloaded.
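The header check could be sketched as follows. The sketch assumes the headers come from a HEAD request, for example `requests.head(url, allow_redirects=True, timeout=10).headers`. `is_downloadable` is a hypothetical helper that treats text and HTML responses as web pages rather than files. Treating a missing Content-Type as downloadable is also an assumption.

```python
from typing import Mapping, Optional


def is_downloadable(headers: Mapping[str, str]) -> bool:
    """Guess from response headers whether a URL points at a file rather than a page."""
    # Lower-case the header names so a plain dict behaves like requests'
    # case-insensitive headers object
    lowered = {name.lower(): value for name, value in headers.items()}
    content_type: Optional[str] = lowered.get("content-type")
    if content_type is None:
        return True  # assumption: no Content-Type, so don't rule the download out
    content_type = content_type.lower()
    # Text and HTML responses are usually web pages, not files meant for download
    return "text" not in content_type and "html" not in content_type
```

Because only the headers are fetched, this check costs one small request per URL, and the body is downloaded only when the check passes.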

To restrict downloads by file size, we can read the file size from the Content-Length header and then decide according to our requirements.
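A size check might look like this, again working on headers from a HEAD request. `content_length` and `within_size_limit` are hypothetical helpers. Rejecting responses with no Content-Length header is an assumption: servers may omit the header, for example for chunked responses.

```python
from typing import Mapping, Optional


def content_length(headers: Mapping[str, str]) -> Optional[int]:
    """Return the Content-Length header as an int, or None if absent or malformed."""
    for name, value in headers.items():
        if name.lower() == "content-length":
            try:
                return int(value)
            except ValueError:
                return None
    return None


def within_size_limit(headers: Mapping[str, str], max_bytes: int) -> bool:
    """True only if the advertised size is known and no larger than max_bytes."""
    size = content_length(headers)
    # Unknown size is treated as too risky (assumption; relax if needed)
    return size is not None and size <= max_bytes
```

For instance, `within_size_limit(requests.head(url, allow_redirects=True, timeout=10).headers, 200 * 1024 * 1024)` would allow files up to 200 MB. That limit is an arbitrary example.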

Get the filename from a URL

To get the filename, we can parse the URL. A simple routine takes the string after the last slash (/).
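One way to write that routine uses only the standard library. `filename_from_url` is a hypothetical name. Using `urlsplit` first, so that a query string such as `?v=2` does not end up in the filename, is our own refinement of "take the text after the last slash".

```python
import posixpath
from typing import Optional
from urllib.parse import unquote, urlsplit


def filename_from_url(url: str) -> Optional[str]:
    """Return the last path segment of `url`, or None if there is none."""
    # urlsplit separates the path from the query string and fragment
    path = urlsplit(url).path
    # basename keeps only the text after the final '/'
    name = posixpath.basename(path)
    # Decode percent-escapes such as %20; an empty result means the URL ended in '/'
    return unquote(name) or None
```

Note that for `http://url.com/download` this returns `"download"`, which is not a real filename.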


This gives us the filename from the URL. However, in many cases the URL contains no filename information, for example http://url.com/download. In such a case, we need to read the Content-Disposition header, which contains the filename.
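Parsing that header can be sketched with the standard library's MIME parameter parser instead of a hand-written regular expression. This is a swapped-in technique, not necessarily what the original code did. `filename_from_content_disposition` is a hypothetical helper. The header value would come from something like `requests.head(url, allow_redirects=True, timeout=10).headers.get("content-disposition")`.

```python
from email.message import Message
from typing import Optional


def filename_from_content_disposition(header: Optional[str]) -> Optional[str]:
    """Extract the filename parameter from a Content-Disposition header value."""
    if not header:
        return None
    # Let email.message parse the header parameters; it strips surrounding quotes
    msg = Message()
    msg["content-disposition"] = header
    # get_filename() returns None when there is no filename parameter
    return msg.get_filename()
```

If this returns `None`, falling back to the name taken from the URL is a reasonable default.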

Combining the URL-parsing routine with a check of the Content-Disposition header will give you the filename most of the time.

lxml and Requests

lxml is a pretty extensive library written for parsing XML and HTML documents very quickly, even handling messed-up tags in the process. We will also use the Requests module instead of the built-in urllib2 module (urllib.request in Python 3) because of its improvements in speed and readability. You can install both with `pip install lxml` and `pip install requests`.

Let’s start with the imports:
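The imports could be as short as this. It assumes both packages were installed with pip as described above.

```python
# lxml's html module parses the page; requests downloads it
from lxml import html
import requests
```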

Next we will use requests.get to retrieve the web page with our data, parse it using the html module, and save the results in tree:
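A sketch of this step, wrapped in a hypothetical `fetch_tree` helper. The timeout and the `raise_for_status()` call are our own additions.

```python
from lxml import html
import requests


def fetch_tree(url: str, timeout: float = 10.0) -> html.HtmlElement:
    """Download `url` and parse it into an lxml element tree."""
    page = requests.get(url, timeout=timeout)
    page.raise_for_status()  # stop early on 4xx/5xx responses
    # Pass the raw bytes (page.content) so lxml handles the page's encoding itself
    return html.fromstring(page.content)
```

Usage would be something like `tree = fetch_tree("https://example.com/listing.html")`, where the URL is a placeholder for the page holding your data.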

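(We use page.content, the raw bytes, rather than page.text, the decoded string, because html.fromstring expects bytes here. Given bytes, lxml handles the page's declared encoding itself, whereas a string that still contains an encoding declaration can be rejected.)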

tree now contains the whole HTML file in a nice tree structure, which we can traverse in two different ways: XPath and CSSSelect. In this example, we will focus on the former.

XPath is a way of locating information in structured documents such as HTML or XML documents. A good introduction to XPath is on W3Schools.

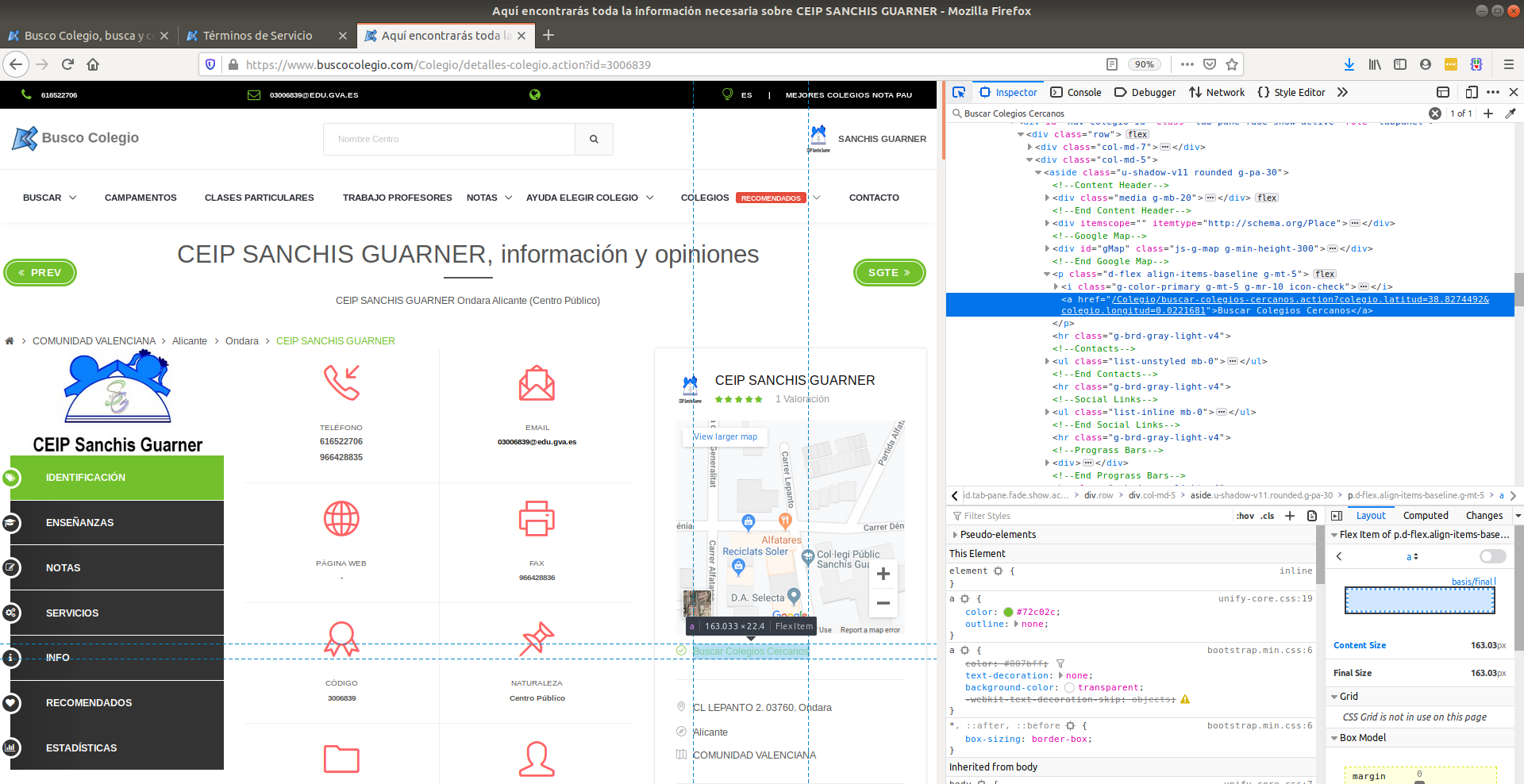

There are also various tools for obtaining the XPath of elements, such as FireBug for Firefox or the Chrome Inspector. If you're using Chrome, you can right-click an element, choose 'Inspect element', highlight the code, right-click again, and choose 'Copy XPath'.

After a quick analysis, we see that in our page the data is contained in two elements: one is a div with title 'buyer-name' and the other is a span with class 'item-price':

Knowing this, we can create the correct XPath query and use the lxml xpath function like this:

Let’s see what we got exactly:
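Here is a sketch of the two XPath queries and what they return. It runs against a small piece of hypothetical markup that mirrors the div/span structure described above, not the real page. `extract_listings` is a hypothetical helper. Converting the results with `str()` just makes them print as plain strings.

```python
from typing import List, Tuple

from lxml import html

# Hypothetical markup mirroring the structure described above
SAMPLE_PAGE = b"""<html><body>
  <div title="buyer-name">Alice</div>
  <span class="item-price">$10.00</span>
  <div title="buyer-name">Bob</div>
  <span class="item-price">$7.50</span>
</body></html>"""


def extract_listings(tree) -> Tuple[List[str], List[str]]:
    """Return (buyers, prices) found by the two XPath queries."""
    # text() selects the text inside every matching element, in document order
    buyers = tree.xpath('//div[@title="buyer-name"]/text()')
    # @class="item-price" matches the whole class attribute exactly
    prices = tree.xpath('//span[@class="item-price"]/text()')
    return [str(b) for b in buyers], [str(p) for p in prices]


tree = html.fromstring(SAMPLE_PAGE)
buyers, prices = extract_listings(tree)
print("Buyers:", buyers)  # Buyers: ['Alice', 'Bob']
print("Prices:", prices)  # Prices: ['$10.00', '$7.50']
```

Because the exact-match test on `@class` fails when an element carries several classes, `contains(@class, "item-price")` is a looser alternative if the real page needs it.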

Congratulations! We have successfully scraped all the data we wanted from a web page using lxml and Requests. We have it stored in memory as two lists. Now we can do all sorts of cool stuff with it: we can analyze it using Python, or we can save it to a file and share it with the world.
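Saving the two lists to a file could be done with the standard library's csv module. `listings_to_csv` and the column names are hypothetical choices, and pairing the lists with `zip` assumes the i-th buyer belongs with the i-th price.

```python
import csv
import io
from typing import Iterable


def listings_to_csv(buyers: Iterable[str], prices: Iterable[str]) -> str:
    """Render buyer/price pairs as CSV text with a header row."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["buyer", "price"])  # hypothetical column names
    # zip pairs items by position and stops at the shorter list
    writer.writerows(zip(buyers, prices))
    return buf.getvalue()
```

To write it to disk, open the file with `open("listings.csv", "w", newline="", encoding="utf-8")` and write the returned string. Passing `newline=""` keeps the csv module's line endings intact.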

Some more cool ideas to think about are modifying this script to iterate through the rest of the pages of this example dataset, or rewriting this application to use threads for improved speed.
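As a starting point for the threading idea, here is a sketch using the standard library's `ThreadPoolExecutor`. `fetch_all` is a hypothetical helper. It takes the download function as a parameter, so any fetcher (for example one built on `requests.get`) can be plugged in.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, List, TypeVar

T = TypeVar("T")


def fetch_all(urls: Iterable[str], fetch: Callable[[str], T], max_workers: int = 4) -> List[T]:
    """Apply `fetch` to every URL on a thread pool; results keep the input order."""
    # Downloads spend most of their time waiting on the network, so threads
    # can overlap that waiting even with Python's GIL
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map returns results in input order; an exception raised by
        # fetch is re-raised here when its result is reached
        return list(pool.map(fetch, urls))
```

A possible usage is `pages = fetch_all(urls, lambda u: requests.get(u, timeout=10).content)`, where `urls` lists the dataset's pages. The page URLs themselves depend on the site, so build them from its real pagination scheme, and keep `max_workers` small to avoid overloading the server.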